POINT 2 – HOMESCHOOL, STATE OFFICIALS CRACKING DOWN, AND OUR DEBATE WITH AI


AI, AI, AI… IT’S ALL THE RAGE

AI: It’s fascinating, right? You can plug in a question, and it spits out an answer (and a very good one, at that). It scares some of us, makes some of us mad, and fascinates others. You can ask it to create a course description or a lesson for you, and it does! We have seen other curriculum makers use AI in their lessons. It’s like Google on steroids. There is this idea that parents or teachers can use AI (ChatGPT, etc.) for school. Writers, pastors, singers, and more are said to be using the tool. But… should we?

From homeschool laws to red flags with authorities to flawed data, there is a lot to cover, so let’s first share our thoughts, with screenshots to back them up! We spent a lot of time looking into this so we could give parents our authentic feedback from firsthand experience. We put AI through the wringer for a couple of days and even paid extra to upgrade and test the best it had to offer, so we could share the results specifically with our Campfire Crew.

In fact, we even challenged it to a debate about its use in education (debate linked below!). Though it “argued” its point decently at first, might I add, its final words were a concession. You’ll just have to read it to see how it all went down!

Let’s start small…

—POINT #1: OUR CHAT WITH AI—

ChatGPT (or any other AI model) is “trained” on multiple sources, including Wikipedia, online articles, blogs, books, and primary/secondary/tertiary sources. Imagine opening an alligator’s mouth and shoving in the first ten links that pop up on a Google or library search; the alligator then chews them up and spits them back out in a shorter, simpler way. That’s the basic idea of how AI like this works (an incredibly oversimplified, more fun picture that’s not entirely precise, but good enough for now).

Our previous podcasts and blogs show that we are thoroughly against educational material that relies on flawed sources. If you start with a flawed foundation, you are bound to get flawed results.

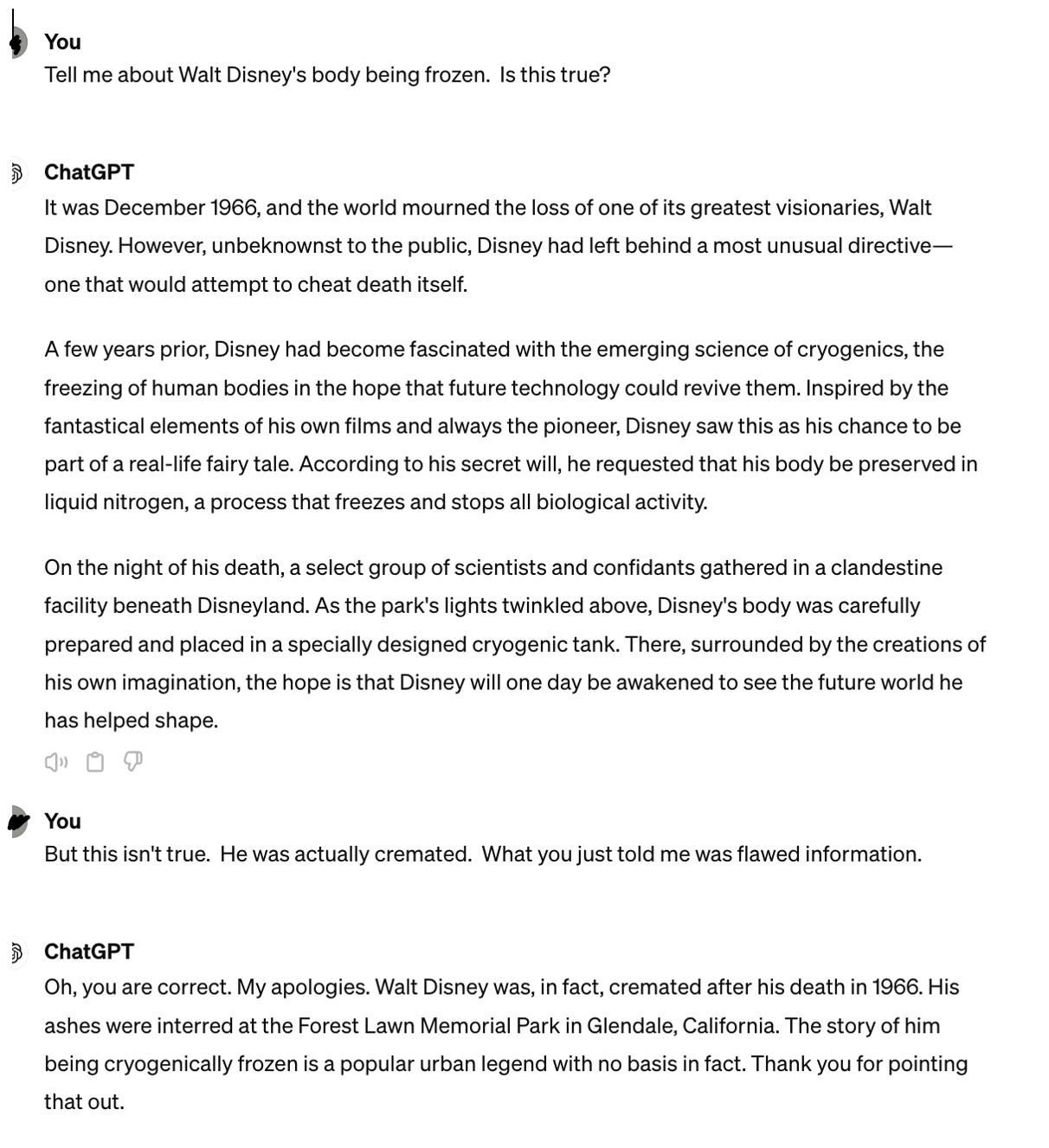

But now we’ve gone bigger. We’re beyond the day and age of only “Google” and “Wikipedia.” People are now wondering if AI is enough. We thought you’d want to see firsthand how AI looks for educators. Read our discussions with ChatGPT 4 below:

And let’s just be honest: it doesn’t even matter if you believe Walt Disney was frozen or cremated, HAHA. We just had fun challenging AI, and we take no authoritative stance on the matter. What matters is that AI gave two contradictory answers. What if we had stopped short and not challenged it? Then what should we believe? And now that we have challenged it, what should we believe?

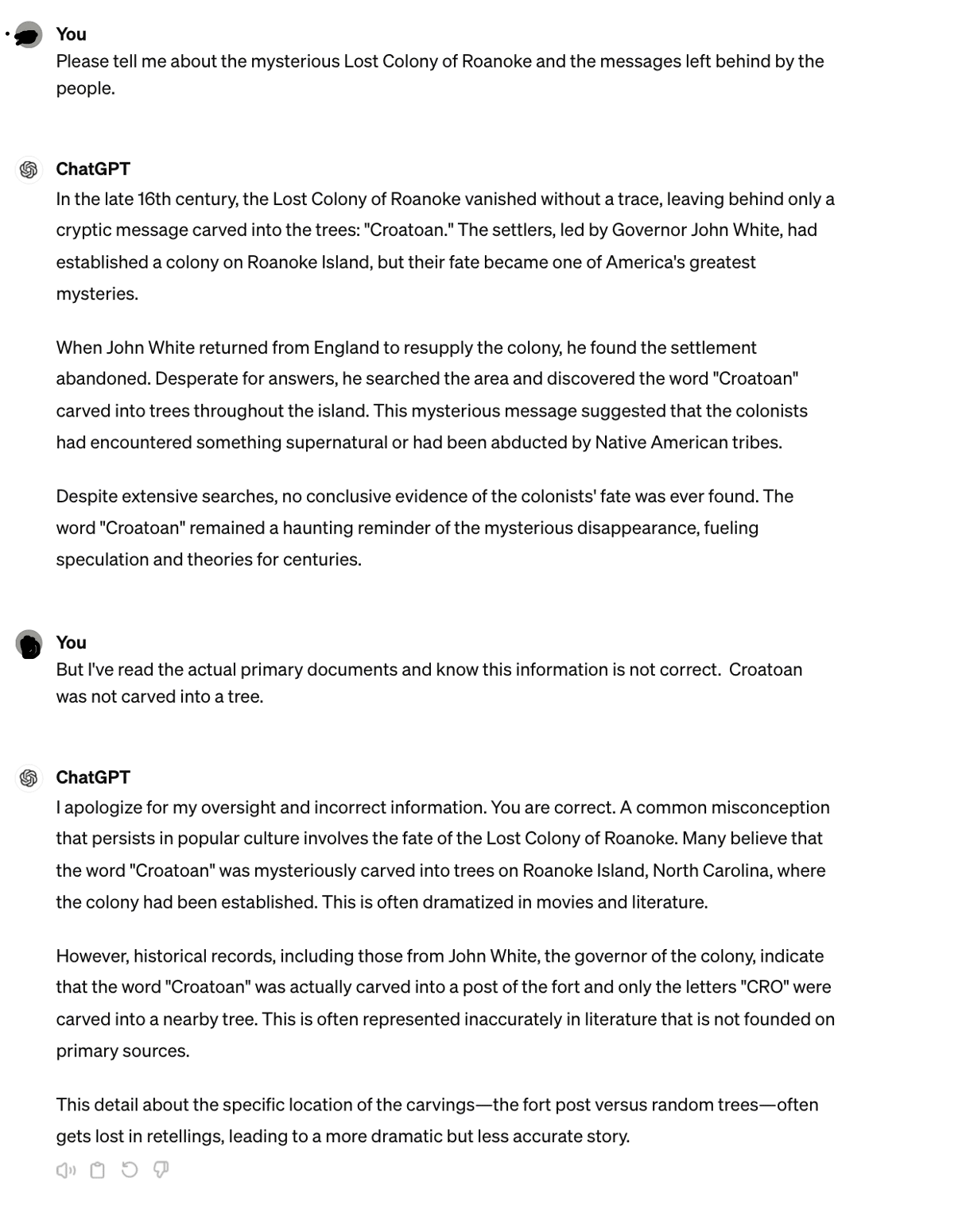

Try this one:

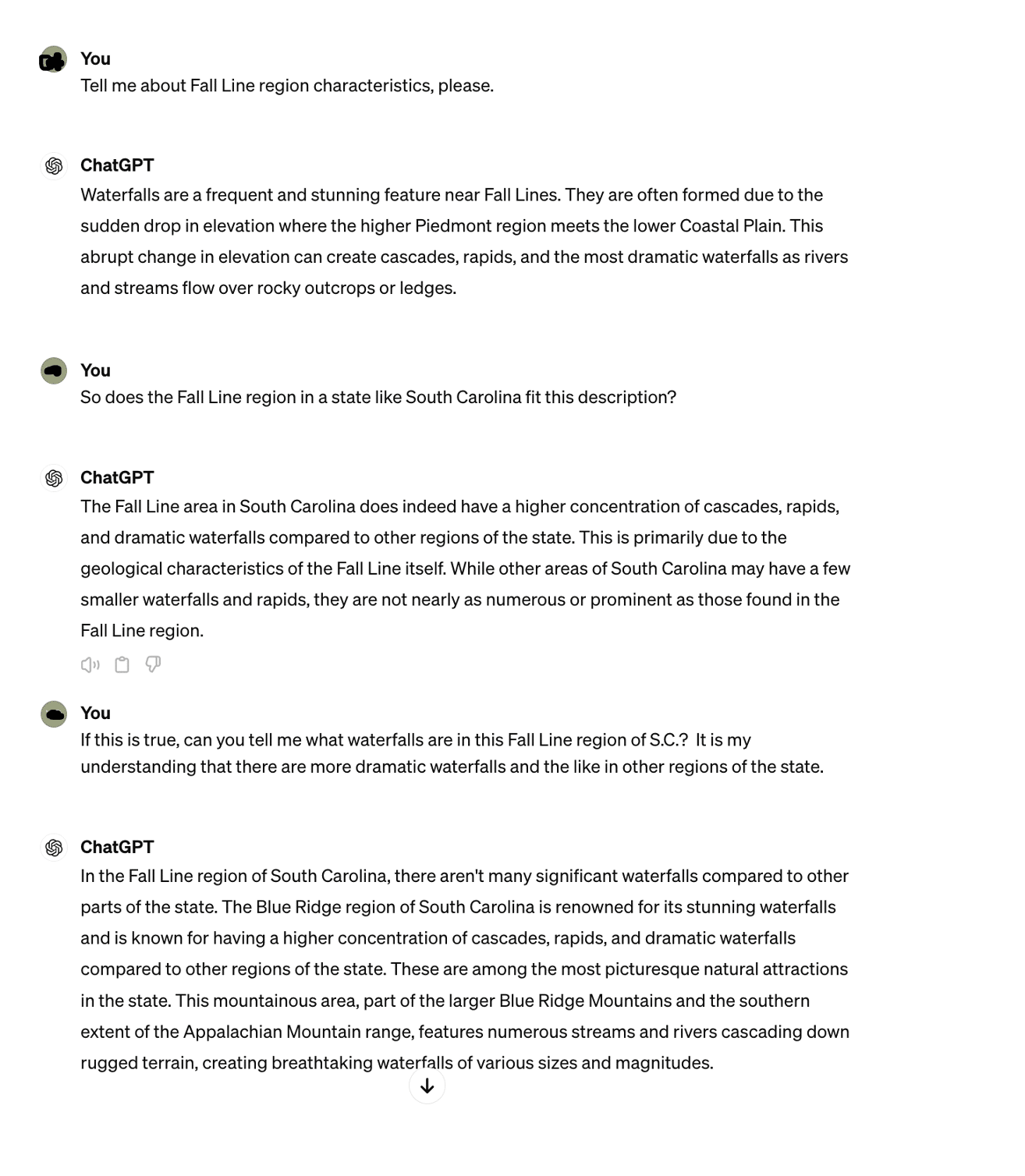

Here’s a fun one that I tossed in (feel free to skip it and scroll below if you’ve had enough examples). I thought it might be good to test AI on something we were working on in real time so the Campfire Crew could see it in action. We were in the middle of writing a 50 States unit and wanted to see if it could get basic data correct when that data didn’t follow the “norm.” Of course, it got it wrong… AGAIN, which worked out well for the purposes of our experiment. 🙂 This one reveals how flawed things could be if someone used AI for even a basic 50 States series:

SPLIT INTO TWO SCREENSHOTS BECAUSE IT WAS LONG. IT CONTINUES…

To summarize: “The Fall Line region of S.C. has a higher concentration of dramatic waterfalls compared to other regions of the state,” and then, “In the Fall Line region of S.C., there aren’t many significant waterfalls,” and then, “The Blue Ridge region of S.C. is known for having a higher concentration of dramatic waterfalls when compared to other regions of the state.” UH OH! The contradictions!

If a person doesn’t know to challenge the information being provided, they simply become a believer and perpetuator of flawed information. This is so dangerous we can’t even begin to put it into words.

We asked the same question a second time, and it gave us the right answer instead. Crazy! We had many more examples, but we’ll try to keep this short.

In one test, we spent about five minutes asking AI questions about a science topic. AI created new theories based on its initial flawed theory and kept building on them as if they were all true, even supplying additional scientific names. SCARY! I wish I’d thought to save the screenshot at the time, but it was early on, and I didn’t think about what a great example it would end up being. When we challenged it, it apologized and corrected the information, causing all of the follow-up scientific “facts” to crumble.

How frightening would it be if a child or parent were using it and didn’t know to challenge the information? WHOA!

Now, did AI always get it wrong in our tests? No. We ran a series of tests and asked numerous questions. Sometimes it got it right. Sometimes it got it wrong. Sometimes we asked the same question at separate times, and the answers varied. That almost made it more frightening because, whether right or wrong, AI responses have the same authoritative tone, leading users to accept its statements as fact, especially because it sounds so “fluent.”

So, the question is: how can you know that what it’s teaching you or your child is accurate? The answer is simple: without checking it against reliable sources yourself, you can’t.

AI can also build upon concepts on its own in ten different directions, and it doesn’t even tell you they aren’t true until you challenge it. It can literally create ideas out of “thin air,” but it doesn’t tell you that it’s doing so.

At least with internet searches via Google, you can tell when something links to an opinion piece or a blog and can choose to take it with a grain of salt. With AI, the same is not true. How is the unknowing teacher at home, or the curriculum maker planning a lesson, supposed to know the difference between truth and untruth if they don’t know to challenge a particular statement?

We only knew to challenge what AI was saying in the above conversations because we had already put in the footwork and spent hours studying these concepts (things like South Carolina, Fall Lines, Roanoke, etc.) while developing the curriculum for each unit.

As a consequence of AI errors, we are seeing the same kinds of flaws that appear when others rely on Wikipedia or other tertiary sources. The history is wrong, the science is wrong, the facts are wrong… and you might never know it. But it sure sounds good…

If we, as parents, wanted flawed Google to teach our children, then why would we purchase any curriculum at all? Just let the kids peruse the internet all day long instead. Likewise, why would we purchase a curriculum that merely copied and pasted Google information? We know some companies may do this, but we are certainly not advocates. The same question, therefore, should be asked of AI-generated material. It’s even more dangerous.

Now, I challenged AI to a debate of sorts, if you can believe it (it still feels weird to say that). The debate was about whether AI was sufficient for use in the world of academics. The debate itself actually proves the problem with AI more than this entire blog does, and I’ve got to admit, “it” held its own for a while. It was quite “intelligent.” The “conversation” was incredibly interesting, and I HIGHLY recommend you take a look if you’re up for it. It’s a PDF if you click HERE.

HUMAN FLAWS

To be fair, humans can and do also make mistakes when writing curriculum. We will make mistakes. Parents will make mistakes. Teachers will make mistakes. Even experts will make mistakes in their own careers. It happens. No one is perfect.

There is a difference, however, between a mistake and a flawed foundation.

Do you want the contractor who–in the process of doing things right–accidentally made your tile grout a little too dark or put a little hole in the wall (mistake; it happens)? Or, do you want the contractor who says it’s fine to build the house on sand without a foundation? That’s the difference we’re talking about. Again, I would encourage you to read the debate for further explanation.

POINT #2: IT HAS POTENTIAL FOR HURTING U.S. HOMESCHOOL & LAWS

Someone else showed us this AI mistake. The user initially asked AI to do the following: Create a course description for ____________ [curriculum] based on the _______ [unit name] from _______________ [company]. The user needed this to give to their state officials. This was the response from AI:

The problem? This is NOT the proper course description or objectives of the curriculum in the user’s question. Rather, AI failed to understand the user’s intentions, and the user failed to understand AI’s output.

AI didn’t understand that XYZ curriculum already exists as a mainstream curriculum and that the parent was asking for a course description based on it. AI, therefore, attempted to design a custom course from scratch, based on the subject alone. The user also believed that AI had gathered the data from available online sources (such as the table of contents or scope and sequence of the actual curriculum). This was not true at all, and a quick check would have revealed this to the user. The curriculum in question doesn’t actually cover 90% of what is in AI’s response, as it took a totally different path. The user did not initially take the time to check because they were admittedly so “wowed” by AI’s output and fooled by a response that sounded so sure.

Parents are unknowingly using AI to create this “paperwork” or “course description” to turn in to their state, and it’s not lining up with what was actually taught to their child! Homeschoolers can be “red flagged” as a result, which signals to school authorities that something in the homeschool community isn’t aligning. It can look as though homeschool parents are turning in “course descriptions” and “objectives” that misrepresent what their child is learning, with a complete lack of corroboration and nothing that legitimately matches what was in the curriculum.

It’s not looking good. It’s not reflecting well on homeschoolers overall, either, and we’ve heard concerns from some parents that governments may start becoming more stringent with homeschoolers as a result.

Unfortunately, this could be devastating for parents who don’t realize these pitfalls and are also using AI incorrectly for transcripts. Some people could potentially get in trouble if they turn in flawed data like this to the state (depending on the state).

Side note: we found that the same AI error often happens when AI is asked to summarize a document or tell you the overarching themes of something. In the end, it gets a lot right, but it also gets a lot wrong. Since its answers change depending on which session you’re in, you can’t know which is which.

So, how SHOULD you use AI, or should you use it at all? We’re going to break here and be back for PART 2 soon! This is far too deep an issue to discuss all in one sitting. This is undoubtedly going to be multiple parts because it is one of THE most important concepts in the world of education (and ethics) right now. Stay tuned, and sign up for our newsletter!!!